Implementation for systemd

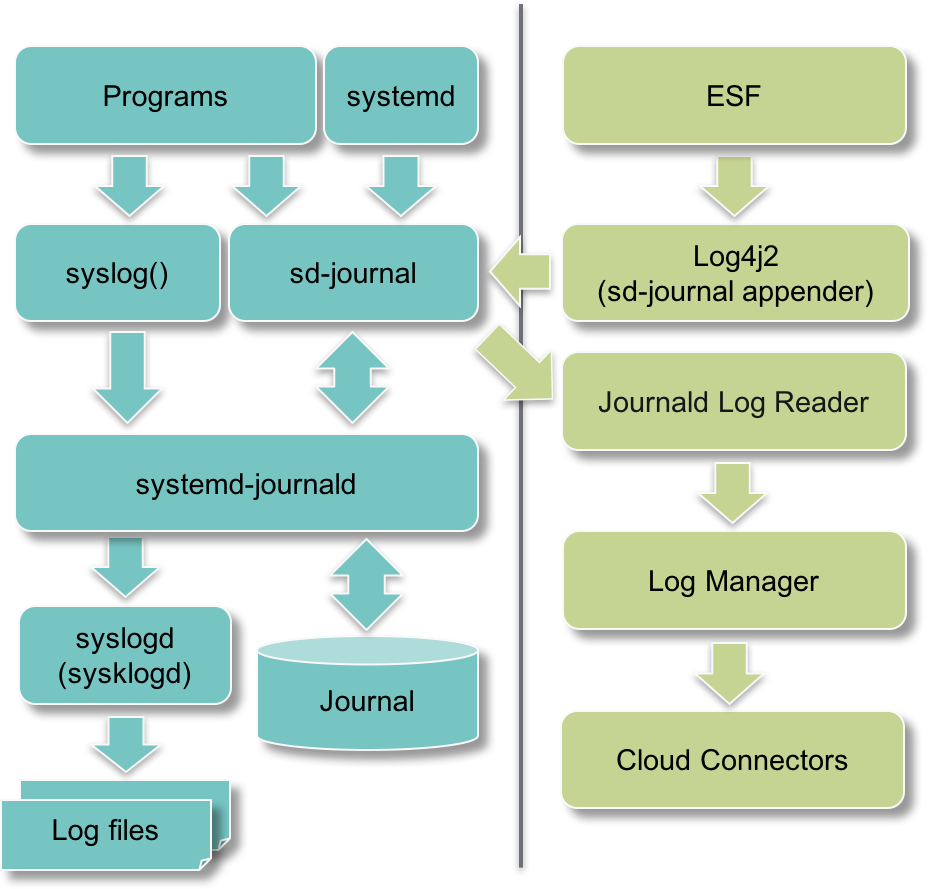

Architectural Overview

The diagram below illustrates the flows of logging data in a Linux distribution with systemd. In such distributions, programs can log either through the POSIX syslog() call or through the sd-journal API of systemd. In both cases, logging data is collected by systemd-journald and stored in the journal, a database that can be kept in memory or written to a file.

The standard output and standard error of systemd units' processes are also collected by systemd-journald unless they have been redirected to file. For example the standard output streams of the kura.service's JVM process are stored in the journal unless they have been redirected to /var/log/kura-console.log.

systemd can be configured to forward logging data to other logging backends such as syslogd to create the well-known text files in the /var/log/ directory.

ESF uses the Log4j 2 logging framework that can be configured to append log events to the journal and optionally to /var/log/kura.log.

The Log Analytics components query log entries from the journal database and apply chains of pattern matching filters to publish significant log entries to a cloud platform at a limited maximum rate.

The transition to the final journal-centric architecture where logging data is no longer forwarded to syslogd and ESF no longer creates log files is subjected to the availability of a logging configuration able to reliably persist the journal on an eMMC storage device.

Log4j Setup

In order to log to the journal, the feature ships the log4j-systemd-journal-appender and a new Log4j configuration file.

The appender maps the Log4j log level of a log event to the journal PRIORITY field of a log entry.

Log4J Level to Journal Priority Mapping

The mapping between the Log4j log level and the journal PRIORITY field is as follows:

Log4j Level | Journal PRIORITY |

|---|---|

FATAL | 2 |

ERROR | 3 |

WARN | 4 |

INFO | 6 |

DEBUG | 7 |

TRACE | 7 |

Log4j Configuration File

The following log4j.xml configuration file appends log events only to the journal and does no longer create the file /var/log/kura.log. It is still possible to append log events both to the journal and to /var/log/kura.log combining it with the Log4j configuration shipped with earlier releases of ESF.

<?xml version="1.0" encoding="UTF-8"?>

<!--

Copyright (c) 2020 Eurotech and/or its affiliates

All rights reserved.

-->

<Configuration status="warn" strict="true" name="KuraConfig" monitorInterval="30" packages="de.bwaldvogel.log4j">

<Filter type="ThresholdFilter" level="trace"/>

<Appenders>

<SystemdJournal name="journal" logStacktrace="true" logSource="false" syslogIdentifier="${env:ESF_LOGGER_NAME:-ESF}" syslogFacility="20" logLoggerAppName="ESF"/>

</Appenders>

<Loggers>

<Logger name="org.eclipse" level="info" additivity="false">

<AppenderRef ref="journal" />

</Logger>

<Root level="info">

<AppenderRef ref="journal"/>

</Root>

</Loggers>

</Configuration>As it can be seen, the Log4j configuration file has been updated to load the package de.bwaldvogel.log4j of log4j-systemd-journal-appender.

The new journal appender, whose type is SystemdJournal, creates log entries in the journal with SYSLOG_FACILITY set to the string "20" and SYSLOG_IDENTIFIER set to the string "ESF". The name of the Log4j Logger used to log a message is assigned, as a string, to the custom field ESF_LOGGER of the journal log entry through the XML attribute logLoggerAppName.

The configuration of Loggers references only the journal appender.

ESF Log Analytics Default Components

The ESF implementation of the Log Analytics APIs bundles 3 main factory components:

- Journald Log Reader

- Log Manager

- Log Publisher

Journald Log Reader

The Journald Log Reader implements the LogReader APIs and provides an implementation that is capable to interact with the system journal by reading the journal entries and producing LogEntry instances that can be further processed.

The Journald Log Reader component supports storing in a file an identifier (cursor) of the last log entry read, so that it is possible to resume reading from it after a framework restart. The Journal Cursor Path configuration property defines the path of such file, if left empty the journal will be read from the beginning every time the framework is restarted.

The default value for the Journal Cursor Path is empty, this is suitable for the cases when the journal is kept in RAM only. It is recommended to set a value for this property if the journal is persisted.

ESF 7.0 default configuration sets Journal Cursor Path to /var/log/journal/journal.cursor on the platforms/OS images where journald is configured to use persisted storage by default in OS factory configuration.

Default Journal Fields

As described in the official Journald documentation, the fields defined in a journal entry can be freely defined by the applications that are writing to the journal.

In a journal entry can be found:

- User Journal Fields: directly passed from clients and stored in the journal.

- Trusted Journal Fields: fields prefixed with an underscore are trusted fields, i.e. fields that are implicitly added by the journal and cannot be altered by client code.

- Kernel Journal Fields: Kernel fields are fields that are used by messages originating in the kernel and stored in the journal.

- Fields to log on behalf of a different program: Fields in this section are used by programs to specify that they are logging on behalf of another program or unit.

All those fields with special meanings are optional.

The ESF implementation is flexible to manage all those optional fields and is also capable to parse and manage fields that appear more than once per entry.

To provide consistency between entries read from the journal, the Journald Log Reader always provides a value for the PRIORITY, SYSLOG_FACILITY and SYSLOG_IDENTIFIER fields.

If those values are available in the journal entry, they are left unchanged. But, in the special case they are not available in the journal log entry read, the Journald Log Reader adds some default values as per the following table.

Journal Field | Default Value (if not set) |

|---|---|

PRIORITY | 8 |

SYSLOG_FACILITY | 24 |

SYSLOG_IDENTIFIER | unknown |

Filter Format

The JournaldLogReader component provides a Log filters property that allows to create a log entry filter that is applied by the underlying libsystemd library. This filter provide less flexibility than LogReader ones (e.g. it does not support regexes) but allows to reduce the number of log entries that are processed by ESF framework.

This property allows to specify a JSON array that encodes a sequence of calls to the sd_journal_add_match, sd_journal_add_disjunction, and sd_journal_add_conjunction. See [1] for a detailed description of these calls.

The entries can have the following structure:

-

json object: addssd_journal_add_matchcalls to the sequence.The JSON object must be composed of a set of properties whose value is a

json string.

Each property will add asd_journal_add_matchcall to the sequence.The property key and value encodes the journald entry field name and field value to be matched.

-

ANDjson string: adds asd_journal_add_conjunctioncall to the sequence. -

ORjson string: adds asd_journal_add_conjunctioncall to the sequence.

See [1] for more details about the behaviour of sd-journal API calls above and how to combine them.

Example Log filters configuration property values:

[

{

"_SYSTEMD_UNIT": "kura.service"

}

][

{

"_SYSTEMD_UNIT": "kura.service",

"SYSLOG_FACILITY": "4"

}

][

{

"_SYSTEMD_UNIT": "kura.service"

},

"OR",

{

"_SYSTEMD_UNIT": "named.service",

"SYSLOG_FACILITY": "4"

}

][1] https://www.freedesktop.org/software/systemd/man/sd_journal_add_match.html

Log Manager

The LogManager component is an OSGi factory that provide an implementation for the LogReaderListener API.

Once configured, the LogManager instance registers itself as a Listener in the specified LogReader instance and will be notified of new log entries read in the journal by the invocation of the newLogEntry callback method.

Once the callback has been invoked, the LogManager instance will receive a LogEntry instance containing a map of properties that represents the fields read from the journal entry.

The received LogEntry will be than processed from the LogManager instance and if the entry meets the filtering requirements, it is then sent to the specified cloud platform via the configured cloud publisher.

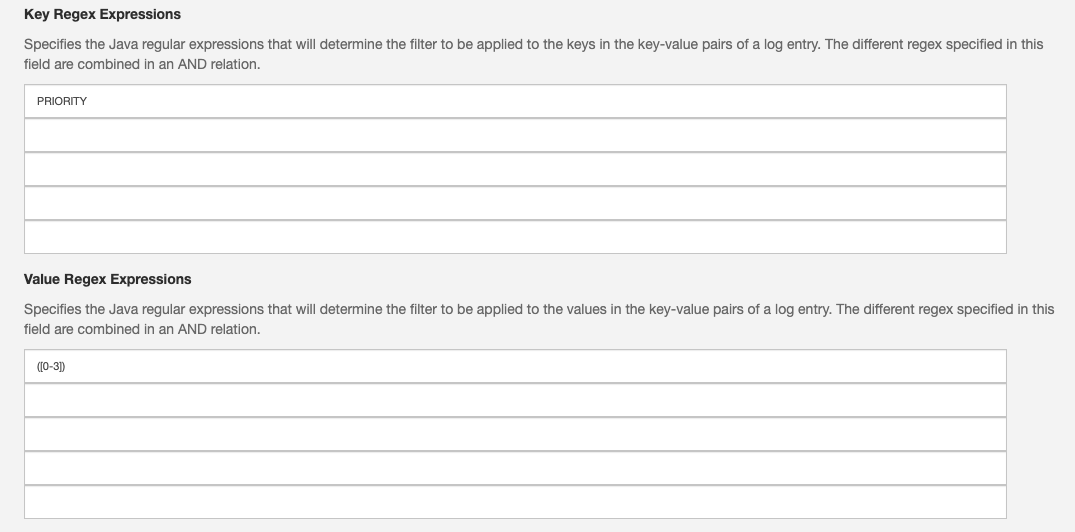

LogEntry Content Filtering

The key-value pairs that compose the properties of a LogEntry are processed in order to find a match with the regular expressions configured in the LogManager instance configuration.

If a match is not found, the LogEntry message is dropped.

In case of a corresponding match, the LogEntry message will became a candidate for publishing to the cloud platform.

In the example above, a LogEntry message will become a candidate for publishing to the cloud if it contains a property with a key equal to PRIORITY and a value in the range 0 (Emergency) to 3 (Error).

The different regexes specified in the Key field are matching in an AND relation. Same for the regexes in the Value field.

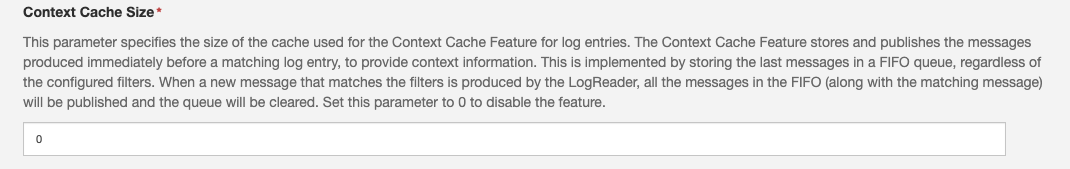

Content Cache Size

The Context Cache Feature stores and publishes the messages produced immediately before a matching log entry, to provide context information.

This is implemented by storing the last messages in a FIFO queue, regardless of the configured filters. When a new message that matches the filters is produced by the LogReader, all the messages in the FIFO (along with the matching message) will be published and the queue will be cleared.

By default, this feature is Disabled (set to 0).

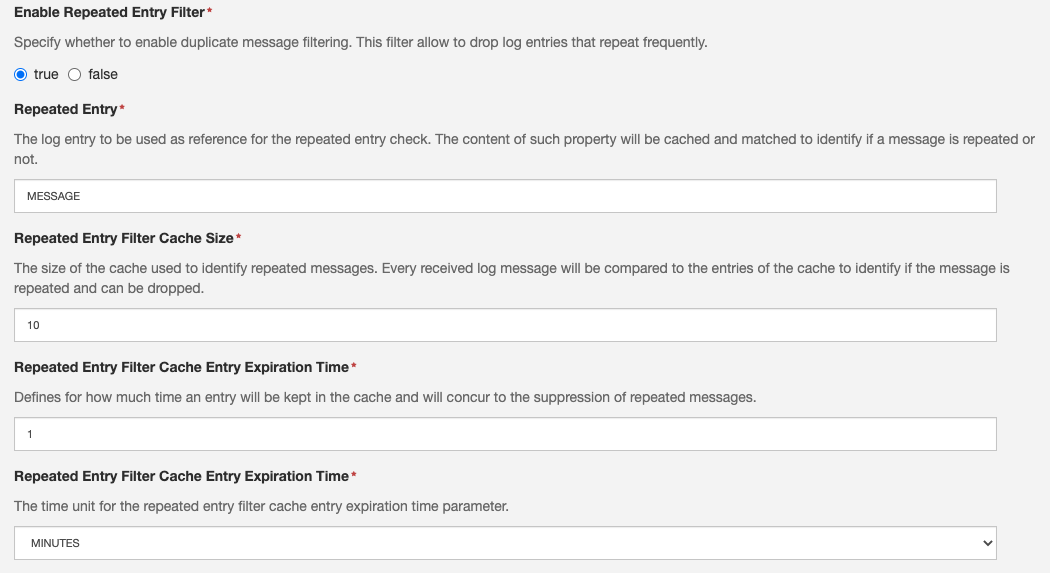

Repeated entry filter

The repeated entry filter maintains a cache of the latest N log entries to detect and drop duplicate entries. Includes a configuration parameter that allows to periodically publish repeated messages.

If applied to DataServiceImpl logger, it will prevent the positive feedback issue while allowing to publish at least once exception messages.

The related configuration parameters are the following:

- Enable repeated entry filter: Specify whether to enable duplicate message filtering.

- Repeated entry filter cache size: The number of past log entries to store for repeated entry search.

- Repeated entry filter cache entry expiration time: Defines the expiration time for the repeated message cache entries. After a cache entry for a given message expires, that message will not be dropped if logged again.

- Repeated entry filter cache entry expiration time unit: The time unit for the repeated entry filter cache entry expiration time parameter.

This filter works as follows:

- The filter maintains a cache whose size is determined by the value of the Repeated entry filter cache size .

- The cache entries are

(message, timestamp, drop_count)tuples. - The cache is structured as a queue ordered according to the entry last access order (see below).

When a new log entry n is generated at instant now, the filter applies the following procedure,

- If there exist a cache entry

ewheree.message == n.MESSAGEeis moved to the top of the cache- if

now - e.timestamp > Repeated entry filter cache entry expiration timenwill be publishede.drop_countwill be set to 0.e.timestampwill be set tonow.

- otherwise

nwill be droppede.drop_countwill be increased.

- otherwise

nwill be published- a new

(n.MESSAGE, now, 0)entry will be added to the top of the cache - if new the cache size is greater than Repeated entry filter cache size, the entry at the bottom of the cache will be removed.

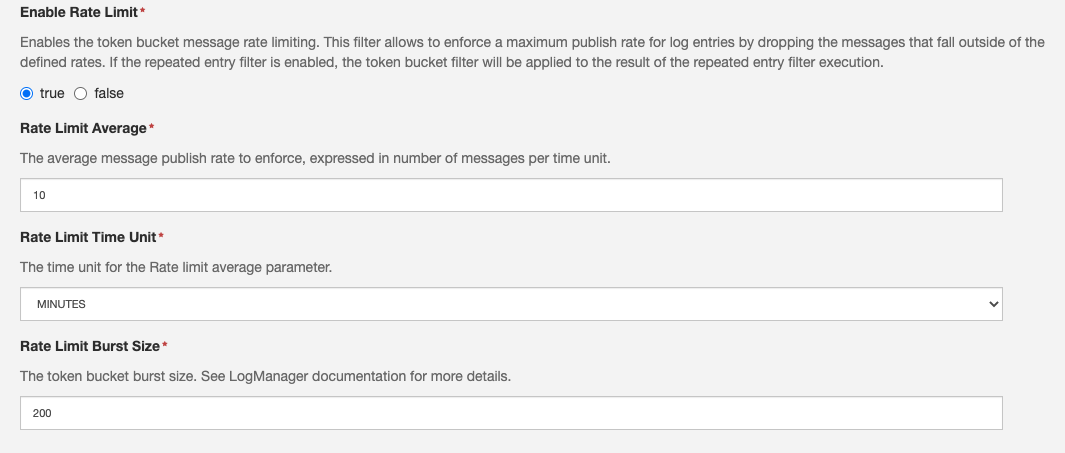

Rate limiting filter

After successfully passing the previous steps, the LogManager implementation applies to the LogEntry a rate limiting filter based on a token bucket algorithm similar to the one applied by the DataService. This filter will drop entries if the publish rate exceeds the configured values.

The related configuration parameters are the following:

- Enable rate limit: Enables the token bucket message rate limiting.

- Rate limit average: The average message publish rate in number of messages per time unit.

- Rate limit time unit: The time unit for the Rate limit average parameter.

- Rate limit burst size: The token bucket burst size.

The filter allows to limit the average number of published messages related to log entries to Rate limit average messages per Rate limit time unit. The Rate limit burst size allows to prevent dropping occasional messages bursts.

This filter implements the token bucket algorithm in the following way:

- The log manager keeps a token counter.

- A log entry can be published only if the token count is greater than zero when the entry is generated. If the token count is zero, the entry will be dropped.

- Publishing a log entry decrements the token count by one.

- The maximum value of the token counter and its initial value is Rate limit burst size.

- The counter is incremented once every 1/

Average publish rate per secondseconds, whereAverage publish rate per secondis derived from the value of the Rate limit average and Rate limit time unit configuration parameters.

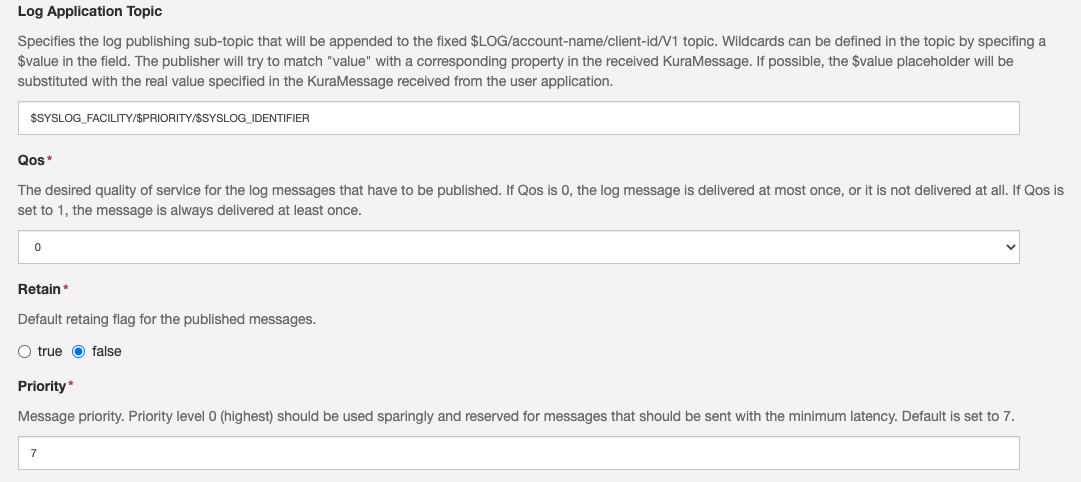

Log Publisher

The LogPublisher is an implementation of the CloudPublisher APIs that provides specific support for publishing log messages to the cloud.

Compared to a default CloudPublisher implementation, for example the one provided by org.eclipse.kura.cloud.publisher.CloudPublisher, the Log Publisher publishes logs in the special topic

$LOG/<account-name>/<client-id>/V1/$SYSLOG_FACILITY/$PRIORITY/$SYSLOG_IDENTIFIERThe Log Publisher provided by the com.eurotech.framework.log.publisher.LogPublisher factory, allows to configure the following parameters:

- Log Application Topic: Specifies the log publishing sub-topic that will be appended to the fixed $LOG/account-name/client-id/V1 topic. Default set to $SYSLOG_FACILITY/$PRIORITY/$SYSLOG_IDENTIFIER

- Qos: The desired quality of service for the log messages that have to be published. Default set to 0.

- Retain: Default retaing flag for the published messages. Default set to false.

- Priority: The Message priority. Default set to 7.

Updated 3 months ago